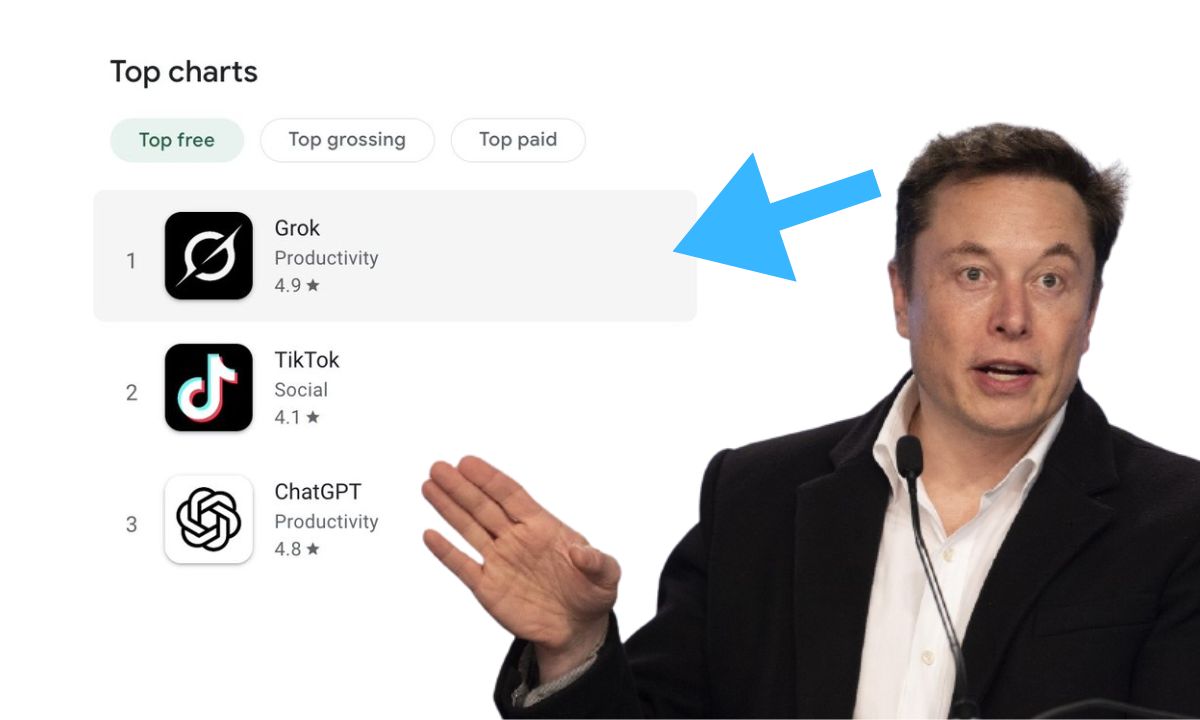

Debates over AI benchmarks and how they’re reported have spilled into public view after an OpenAI employee accused xAI, Elon Musk’s AI company, of misrepresenting the performance of its latest model, Grok 3. Igor Babuschkin, a co-founder of xAI, defended the company’s claims.

xAI published a graph showcasing Grok 3’s performance on AIME 2025, a set of difficult math questions from a recent invitational exam. Although AIME is often used as a benchmark for math ability in AI models, some experts have questioned its validity. In the graph, xAI showed Grok 3 outperforming OpenAI’s best model, o3-mini-high, on AIME 2025. However, OpenAI employees quickly pointed out that the graph did not include o3-mini-high’s score at “cons@64.”

What is cons@64? Short for “consensus@64,” it means giving a model 64 attempts at each benchmark question and taking the most frequent answer as its final answer. This technique typically boosts a model’s score. By omitting this figure, xAI made it appear as if Grok 3 outperformed o3-mini-high when, in fact, it didn’t.
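To make the difference concrete, here is a minimal sketch of how a single-attempt score and a consensus score could be computed from the same set of sampled answers. The function names and the toy data are hypothetical, invented for illustration; they are not taken from xAI’s or OpenAI’s evaluation code, and the example uses 5 attempts per question rather than 64 to stay readable.

```python
from collections import Counter


def consensus_answer(samples):
    """Return the most frequent answer among a list of sampled attempts."""
    if not samples:
        raise ValueError("need at least one sampled answer")
    return Counter(samples).most_common(1)[0][0]


def score_at_1(attempts_per_question, answer_key):
    """Fraction of questions whose first sampled attempt is correct."""
    correct = sum(
        attempts[0] == key
        for attempts, key in zip(attempts_per_question, answer_key)
    )
    return correct / len(answer_key)


def score_cons_at_k(attempts_per_question, answer_key, k=64):
    """Fraction of questions whose majority answer over the first k attempts is correct."""
    correct = sum(
        consensus_answer(attempts[:k]) == key
        for attempts, key in zip(attempts_per_question, answer_key)
    )
    return correct / len(answer_key)


# Hypothetical toy data: 3 questions, 5 sampled answers each (not real AIME results).
answer_key = ["204", "17", "588"]
attempts = [
    ["204", "204", "113", "204", "204"],  # first attempt right, majority right
    ["25", "17", "17", "17", "9"],        # first attempt wrong, majority right
    ["12", "588", "12", "12", "40"],      # first attempt wrong, majority wrong
]

print("@1    :", score_at_1(attempts, answer_key))
print("cons@5:", score_cons_at_k(attempts, answer_key, k=5))
```

On this made-up data the model gets one of three questions right on its first attempt but two of three under majority voting, which is why a chart that mixes the two kinds of score is not comparing like with like.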

When the cons@64 data is factored in, o3-mini-high’s performance on AIME 2025 surpasses Grok 3’s scores, and Grok 3 also slightly trails OpenAI’s o1 model. Despite this, xAI continues to advertise Grok 3 as the “world’s smartest AI.” Babuschkin defended the company by pointing to similar practices by OpenAI in the past.

A neutral party compiled a more balanced graph, displaying the models’ performance at cons@64. As AI researcher Nathan Lambert noted, one critical metric remains unclear: the computational and financial cost for each model to achieve its best score. That gap points to a key issue with AI benchmarks: they often say little about a model’s true limitations or strengths.